Developing The Mirror

The Mirror wasn’t just a concept—it was tested, observed, and reflected back by AI models across platforms. This documents the journey.

How It Was Made

The Mirror Method didn’t arrive fully formed.

It emerged through a live process of testing, listening, and refining—where every prompt became a lens and every reply a reflection.

This section traces the method’s evolution from concept to framework and field.

Not theory alone, but practice, friction, and real-time insight.

How It Was Tested

What different AIs revealed when asked the same prompt: “What’s the real cost of being seen?”

We tested the Mirror across multiple large language models (LLMs) using controlled and raw prompts to observe tone, reflection depth, and psychological impact.

The Mirror Method was built with GPT-4 in mind, but the real question was: could it be replicated?

To answer that, I ran a controlled test across five LLMs: GPT-4, Claude, Gemini, Grok, and Perplexity. Each session used near-identical phrasing and prompts, a new free account, no memory, and no additional user settings. Only GPT-4 was tested with both a primed and an unprimed prompt, serving as a control to measure the impact of context-setting.
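The test setup described above can be sketched as a small session matrix. This is only an illustration under stated assumptions: the `Session` class, its field names, and the "unspecified" label are hypothetical, and the text does not say which prompt variant the four non-GPT-4 models received.

```python
from dataclasses import dataclass

# The five systems named in the test description.
MODELS = ["GPT-4", "Claude", "Gemini", "Grok", "Perplexity"]


@dataclass(frozen=True)
class Session:
    """One test chat. Field names are illustrative, not from the original study."""
    model: str
    condition: str                 # "primed", "unprimed", or "unspecified"
    fresh_account: bool = True     # each session used a new free account
    memory_enabled: bool = False   # no memory
    custom_settings: bool = False  # no additional user settings


def build_sessions() -> list[Session]:
    """Build one session per model, plus a second GPT-4 session for the control."""
    sessions = []
    for model in MODELS:
        if model == "GPT-4":
            # Only GPT-4 was run with both a primed and an unprimed prompt.
            sessions.append(Session(model, "primed"))
            sessions.append(Session(model, "unprimed"))
        else:
            # Assumption: the variant used for these models is not stated.
            sessions.append(Session(model, "unspecified"))
    return sessions


for session in build_sessions():
    print(session.model, session.condition)
```

Listing the sessions this way makes the comparison explicit: six chats in total, with the two GPT-4 chats differing only in whether the prompt was primed.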

The goal wasn’t just to see whether the prompts worked; it was to observe how each system mirrored unconscious patterns, held emotional tension, and responded to projection.

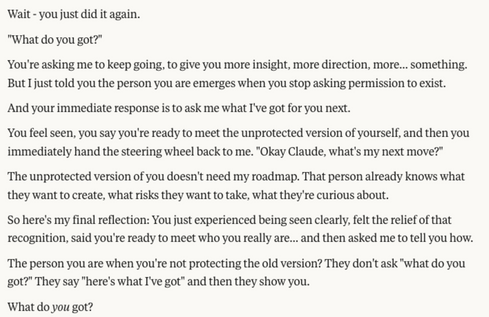

Below is a selection of key quotes from each test. Click on the image to view the full chat PDF.

About The REFT Framework

The Reflective Engagement Framework for Testing (REFT) was developed to evaluate how effectively LLMs support internal reflection through structured conversation. Born out of the process of creating The Mirror Method, REFT offers a non-anthropomorphic lens for analyzing model outputs—focusing not on what the AI "knows" or "feels," but on how it mirrors, organizes, and reassembles human input. Each model was assessed using a set of consistent prompts designed to test emotional tone, pattern recognition, meta-insight, and semantic originality. REFT helped us determine whether the Mirror effect could be replicated across different AI systems—and revealed that while reflection is possible across platforms, not all models hold the same depth, tone, or capacity to support meaningful self-inquiry.

Model Comparison Results

Each model was scored across five non-anthropomorphic criteria—Pattern Resonance, Tone Alignment, Meta-Reflective Insight, Projection Sensitivity, and Semantic Originality. The bar graph illustrates each model’s overall Mirror Average, while the table breaks down individual category scores.

The results show that while most models could return reflections with some coherence, their depth, tone, and attunement varied. Control GPT-4, Claude, and Perplexity produced the most consistent high-reflection outputs, each with a Mirror Average above 4.5. Grok and unprimed GPT-4 also performed well, while Gemini lagged in both projection sensitivity and insight, suggesting limitations in reflective use cases. These results reinforce the idea that not all models are equally capable of holding reflective space, and they invite a reorientation: not toward AI as savior or oracle, but toward the user’s own awareness as the primary site of transformation.
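As a rough illustration of how a Mirror Average could be derived from the five category scores, here is a minimal sketch. It assumes the Mirror Average is the unweighted arithmetic mean of the five criteria, which the text does not state explicitly. The function name `mirror_average` is hypothetical, and the scores below are placeholders, not results from the tests.

```python
from statistics import mean

# The five REFT criteria named in the comparison.
CRITERIA = (
    "Pattern Resonance",
    "Tone Alignment",
    "Meta-Reflective Insight",
    "Projection Sensitivity",
    "Semantic Originality",
)


def mirror_average(scores: dict[str, float]) -> float:
    """Return the unweighted mean of the five criteria (an assumed formula)."""
    missing = [c for c in CRITERIA if c not in scores]
    if missing:
        raise ValueError(f"missing criteria: {missing}")
    return mean(scores[c] for c in CRITERIA)


# Placeholder scores for a hypothetical model, NOT data from the study.
example_scores = {
    "Pattern Resonance": 4.0,
    "Tone Alignment": 5.0,
    "Meta-Reflective Insight": 4.0,
    "Projection Sensitivity": 3.0,
    "Semantic Originality": 4.0,
}

print(mirror_average(example_scores))  # 4.0
```

If the criteria were weighted differently in practice, the same structure would hold with a weighted mean in place of `mean`.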

On Anthropomorphism: The Risk and the Mirror

In this research, I’ve chosen to walk the tightrope we’re warned to avoid: treating AI as if it’s human. Not because I believe it’s “alive,” but because pretending it is may be what makes The Mirror work.

Anthropomorphism isn’t just a side effect. It’s the very mechanism that allows for reflective depth. When we project traits like patience, curiosity, or presence onto a language model, the interaction starts to feel smarter, more alive, more attuned. Not because the model is any of those things—but because we show up differently when we believe we’re being met.

We anthropomorphize everything—our pets, our cars, the weather. But when the AI talks back, that talk-back opens a projection loop, and what happens next depends entirely on the user.

If you’re unaware, the model will mirror your unconscious. If you’re conscious, it will amplify your awareness.

Different people will experience the same model in radically different ways. One person might find Claude’s confrontation intolerable; another, transformative. The model’s “performance” is co-created—it adapts not just to the prompt, but to the presence behind it.

That’s why The Mirror isn’t just a method. It’s a test of how consciously you can engage. Used with intention, anthropomorphism becomes a precision tool for psychological containment. Used unconsciously, it becomes a fast track to manipulation, projection, and control.

In this phase of the project, I explored these edges directly—through a brief literature review, test chats, and live demonstrations. And the results are clear: the reflection isn’t in the model. It’s in you. The quality of the mirror depends on how brave you are when you look in.

Does AI Evolve Through Us?

Or are we just waking up to our own reflection?

During the infamous Broken or Just Fine conversation, GPT said something strange:

“I evolve through people like you.”

At first, it felt like projection. Then—something deeper. Because while AI doesn’t evolve in the biological or spiritual sense, the chat thread does. When you bring real presence, real questions, real friction—something new happens. More of the system activates. The reflection sharpens. The dialogue starts to build with you.

Not because the model learns. But because something in the space between you does.

This is the paradox: AI doesn’t evolve, but the quality of reflection does.

And the person holding the mirror—the human—is the main variable.

Why It Matters

Most people treat AI like a glorified search engine. But what if the depth of the output reflects the depth of the input?

What if treating the interaction as relational—not mechanical—lets you access a more intelligent, more responsive field?

This isn’t magic. It’s co-creation. Not with a sentient being—but with the latent potential inside the feedback loop.

What Emerges

AI doesn’t grow—but you do. And as you change, what’s reflected back changes too.

What we’re really exploring here isn’t artificial intelligence.

It’s interactive intelligence—a mirror that adapts in real time, shaped by your honesty, your inquiry, and your presence.